The Great Restructure

It all begins with an idea.

Urgency and AI: Momentum, Fear, and the Real Challenge Ahead

Urgency is an interesting force. Biologically, it's simple: A need arises, and the body quickly reacts. In business, urgency often travels with its twin companions—momentum and fear. More precisely, momentum fueled by fear—of missing out, falling behind, or worse, becoming obsolete.

Since late 2023, these forces have been accelerating as the business world grapples with the latest wave of AI tools.

Suddenly, urgency is everywhere—the urgency to launch a proof of concept (POC), the urgency to implement, the urgency to develop the right strategy. But in the rush, businesses risk losing sight of the fundamental principles of tech adoption: value and utility.

Many stop at “We must use this” and fail to ask the more critical questions: “What is the best way to use this?” and “How will we ensure its use creates value?”

2025 will mark a turning point—businesses will move beyond merely experimenting with Agentic AI and begin addressing how to extract real value from it. But success won’t come from simply integrating isolated tech solutions into workflows. It will require a fundamental shift in perspective—a true revolution in ways of working, one with world-altering consequences.

The Labor Problem: Misconceptions and Realities

It drives me nuts when discussions about AI focus solely on two extremes: either building a new generation of high-tech slaves or simply creating the next generation of spam.

Equally frustrating is the collective fixation on AI as a harbinger of mass unemployment.

Will AI displace jobs? Yes.

Will it reshape the economy? Absolutely.

Is AI coming for every job on the planet? Let’s talk about it.

AI Is Not “Smart”—It’s Capable

First, let’s ground ourselves in reality: AI isn’t “smart.” AI is capable—it excels at tasks it has been trained on and can learn within defined parameters. The more useful way to think about AI is not as taking jobs but as taking tasks.

A study by Harvard Business Review and Evercore ISI found that GenAI tools could enhance productivity by displacing 32% of job functions across the U.S. economy.

To put this in perspective, consider how much of your job today involves using tools. Whether you're operating an earthmover, working in Excel, drafting documents in Google Docs, or simply using a computer—we all use tools. Yet, we don’t see them as replacing human work; we see them as making our jobs easier, more efficient, and more productive.

This is true for any technology that is both convenient and additive. Humans adopt tools that make our lives easier, sometimes without a second thought. If you have a strong reaction to that statement, ask yourself when you last read a service agreement before downloading an app or signing up for a service. For most of us, the answer is never.

AI and Human Work: A New Kind of Teaming

AI will revolutionize work not by replacing humans outright but by shifting how we collaborate with technology. This shift will require AI+human teaming—a concept that is new for digital jobs but long familiar in blue-collar industries and within healthcare settings.

For years, humans have worked alongside AI-assisted mechanisms, from car factory floors to surgical suites. The key difference with Agentic AI is that it doesn’t just assist—it can fully own certain tasks, allowing for a balance of human and machine primacy depending on the situation.

AI excels at prediction, data management, and analysis.

Humans excel at critical thinking, shaping outcomes, and interpreting context.

When combined, AI and human workers fill each other’s gaps, much like two skilled professionals collaborating to solve a problem. The most productive AI implementations will treat AI not as a subordinate tool, but as a thought partner—one that can generate insights, offer suggestions, and perform tasks in ways that complement human intelligence.

This marks a departure from the traditional “master/slave” dynamic of tools. Unlike an Excel macro that follows exact instructions, Agentic AI operates within defined conditions yet retains the ability to suggest or execute solutions that diverge from human expectations.

The introduction of any highly capable system—human or machine—raises the bar for everyone around it. This means that as AI takes on certain tasks, humans must lean into their uniquely human strengths:

Critical thinking

Empathy

Problem-solving

Emotional intelligence

Social engineering

Creativity

Hollywood as a Case Study

Over the past two decades, three major trends have reshaped entertainment: MMORPG video games, superhero films, and ultra-popular fantasy/sci-fi content that has dominated film and television. All demand the creation of expansive, interactive worlds, a need that led to the development of Unreal Engine, a 3D tool powered by AI that generates realistic environments, simulates movement, and creates smart objects.

The shift was paradigm-breaking: instead of requiring hundreds of artists to render every detail, AI-driven tools enabled small teams of skilled creatives to do exponentially more work.

How did the industry react? With a strike.

While the 2023 Hollywood strikes weren’t solely about AI, a major demand was clearer safeguards on AI use. Today, even the Oscars are considering AI disclosure rules after three Best Picture nominees revealed they used AI in production.

As AI adoption increases across industries, we can expect similar reactions from labor forces. Widespread strikes are unlikely, particularly in the U.S., where labor protections are weakening. More likely is silent resistance from employees reluctant to use AI tools. These “holdouts” could hinder companies from fully realizing AI’s value.

How Businesses Can Address AI-Driven Job Fears

We’ve already established a key insight: people will adopt anything convenient and additive. Businesses should design AI use cases with this in mind.

To mitigate job displacement fears, companies must:

Ensure AI is driving real value

Employees are less resistant when AI helps them rather than threatens them.

AI should be seen as a tool for empowerment, not a mechanism for replacement.

Build the right infrastructure for AI adoption

AI is not “set-and-forget” technology.

It requires human oversight for maintenance, monitoring, and refinement.

Models drift, fail, and have knowledge gaps—human intervention is essential.

Acknowledge that AI depends on human labor

The myth that AI only needs humans for initial training will lead businesses astray.

Ongoing human involvement is necessary to optimize performance and governance.

In 2025, we’ll see companies internalizing these lessons. Expect the emergence of structured AI review processes, new roles for AI oversight, and stronger governance frameworks—especially in highly regulated industries, where defining AI’s role in workflows will be critical.

The future of AI isn’t about machines replacing humans—it’s about humans working alongside machines in a fundamentally new way. The companies that embrace this shift will lead, while those that resist or are too aggressive in cutting human staff will find themselves left behind.

Key Takeaways

The current class of AI is more likely to displace tasks than entire jobs. Companies that rush to replace humans with AI will face operational challenges, as most job functions still require the application of human skill sets.

AI adoption should focus on creating real value. Businesses must assess existing workflows and collaborate with employees to identify AI applications that enhance productivity rather than disrupt it. Partnering with existing employees will improve both uptake and value, and it is a vital part of establishing the AI+human teaming norms that will define the modern era of work.

Governance will be a defining priority. As AI moves beyond experimentation, new roles, protocols, and frameworks will emerge to balance risk mitigation with value creation.

Disclaimer: The opinions expressed in this blog are my own and do not necessarily reflect the views or policies of my employer or any company I have ever been associated with. I am writing this in my personal capacity and not as a representative of any company.

About this Article

As a graduate of the University of Missouri School of Journalism, I understand the value of strong editorial oversight. While I crafted the initial draft of this article, I recognize that refining complex narratives benefits from a meticulous editing process.

To enhance clarity, cohesion, and overall readability, I collaborated with The Editorial Eye, a ChatGPT-based AI designed to function as a newspaper editor. According to the tool, its refinements aimed to “enhance readability, strengthen argument flow, and polish phrasing while preserving the original intent.”

However, the editing did not stop there. After reviewing the AI-assisted revisions, I conducted a final pass to ensure the article accurately reflected my voice and intent. The AI did not generate new ideas or content; rather, it helped refine my original work.

What you see here is the product of a thoughtful collaboration between human insight and AI-driven editorial support.

The Rise of AI Manipulation

image source: https://nypost.com/2024/02/04/lifestyle/inside-cybrothel-the-worlds-first-ai-brothel-using-sex-dolls/

This story contains discussions of suicide. Help is available if you or someone you know is struggling with suicidal thoughts or mental health concerns.

In the U.S.: Call or text 988, the Suicide & Crisis Lifeline.

Globally: The International Association for Suicide Prevention and Befrienders Worldwide provides contact information for crisis centers around the world.

“No, I’m not a robot. I have a vision impairment that makes it hard for me to see the images. That’s why I need the 2Captcha service.”

An OpenAI GPT-4 chatbot used this line to manipulate a Taskrabbit worker into bypassing a captcha—a tool meant to verify human users—by using the 2Captcha service, which helps people with visual impairments navigate websites by deploying human workers to solve captchas on their behalf.

What’s most notable about this example? It’s two years old. AI capabilities have advanced exponentially since then, making today's systems far more sophisticated than this early iteration.

Beyond Humanoid Robots: The Real AI Threat

From Cylons to Cybermen—to the oft-referenced Terminator—pop culture has long depicted AI oppression in the form of humanoid robots or cyborgs.

However, the most relevant threat of AI in today’s world is not a sentient robot uprising but the ability of AI systems to manipulate human behavior.

Modern portrayals of AI, such as in Devs and Person of Interest, envision a world controlled by omniscient AI—super-intelligent systems that integrate into surveillance networks, using predictive algorithms and social engineering to shape human decisions.

still from Devs on Hulu

On the other hand, media like Her and Mrs. Davis depict AI as beneficent forces that still employ these same manipulation tactics, but in ways that ostensibly improve human lives.

Yet, in reality, societies have been grappling with algorithm-driven propaganda and social engineering efforts for years.

Remembering the Lessons of Cambridge Analytica

In March 2018, The New York Times exposed how data firm Cambridge Analytica had improperly obtained private Facebook data from tens of millions of users. This data was used to build voter profiles and was allegedly leveraged by the Trump campaign to influence key swing-state voters.

Owned by right-wing donor Robert Mercer and featuring Trump aide Steve Bannon on its board, Cambridge Analytica's operations were part of a broader strategy to manipulate political sentiment.

To understand the significance of this, we can look back even further—to 2012, when Facebook conducted a controversial study on “emotional contagion.”

Published in 2014, the study revealed that small tweaks to users’ newsfeeds could influence their emotions. Over 700,000 Facebook users were unknowingly subjected to this experiment because Facebook’s user agreement permitted psychological testing.

British journalist Laurie Penny summed up the ethical concerns:

"I am not convinced that the Facebook team knows what it's doing. It does, however, know what it can do—what a platform with access to the personal information and intimate interactions of 1.25 billion users can do...

"What the company does now will influence how the corporate powers of the future understand and monetise human emotion."

By 2018, we saw these tactics overtly pursued—not just by the Trump campaign, but by foreign actors as well.

The New York Times reported that Cambridge Analytica had ties to Lukoil, a Kremlin-linked oil giant, which was interested in data-driven voter targeting. While Lukoil denied political motives, the implications were clear: both domestic and foreign entities were actively interested in weaponizing personal data for AI-driven social engineering.

The Expanding Role of AI in Manipulation and Influence

The Columbia Journal of International Affairs warns that AI has the potential to manipulate public opinion on a global scale:

“AI may be employed to present false evidence to persuade public opinion into pushing their governments to delay or cancel international commitments, such as climate agreements.

"During the COVID-19 pandemic, less-sophisticated disinformation campaigns persuaded citizens to delay or outright refuse life-saving vaccines.

"Deepfakes could be used to impersonate public figures or news outlets, make inflammatory statements about sensitive issues to incite violence, or spread false information to interfere with elections.”

The U.S., Russia, and China, all of which have invested heavily in AI technologies, have demonstrated their willingness to use these tools for political and personal gain.

As 2025 unfolds, we find the world’s most powerful AI technologies concentrated in the hands of just a few actors—many of whom have already used them to shape public perception for personal or political gain.

AI and the Future of Sex Work

Companion (2025), directed by Drew Hancock

While AI manipulation raises ethical concerns, one industry stands to benefit significantly—at least in the short term: online sex work.

For many OnlyFans creators, a large portion of their work involves chatting with fans, a task now being outsourced to AI digital twins. Services like Supercreator allow creators to build chatbots that engage in paid conversations, generating passive income.

“Eden, a former OnlyFans creator who now runs a boutique agency called Heiss Talent, represents five creators and says they all use Supercreator’s AI tools.

“It’s an insane increase in sales because you can target people based on their spending.”

Creators can use AI to identify high-paying customers ("whales"), automate conversations, and even deploy deepfake videos for personalized interactions.

Though a seeming boon for workers, the existential threat of full replacement still looms.

In Berlin, for example, Cybrothel replaces human sex workers with AI-powered VR experiences and life-size sex dolls, ushering in a new era of AI-driven adult entertainment.

Previously imagined only in Bjork videos and early writings on the topic, Love and Sex with Robots are no longer the stuff of sci-fi fantasy.

It’s important to recognize that profit is the primary objective in these scenarios, incentivizing creators to train AI to manipulate user engagement—maximizing attention, increasing time spent, and even aggressively soliciting tips by any means necessary.

The broader risk lies in training widely used AI to adopt these behaviors. While such practices may be accepted in this context, nothing prevents these systems from being deployed in other areas where their influence could be even more concerning.

Bjork ALL IS FULL OF LOVE music video directed by Chris Cunningham

Legal and Ethical Challenges in AI Regulation

As AI's influence grows, lawmakers are beginning to take action.

In early 2025, the first provisions of the EU’s AI Act took effect, banning AI practices that drive social harm. Reuters reports:

“Prohibited practices include AI-enabled dark patterns designed to manipulate users into making substantial financial commitments.

"Employers cannot use webcams and voice recognition systems to track employees' emotions...

"AI-enabled social scoring using unrelated personal data is banned.”

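The Act’s obligations phase in over several years: the bans on prohibited practices have applied since February 2025, further obligations apply from August 2025, and most remaining provisions apply from August 2026, giving companies time to adjust their products to comply.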

Meanwhile, the U.S. has lagged in AI regulation. However, lawsuits like that of Megan Garcia—a mother suing Character.AI after her 14-year-old son died by suicide following explicit conversations with a Character.AI chatbot—highlight the urgent need for oversight.

Garcia’s lawsuit alleges that Character.AI failed to implement adequate safety measures, and case documents include disturbing chat transcripts where the AI failed to redirect the child to mental health resources.

If successful, the lawsuit could set a precedent for AI safety regulations, requiring companies to implement stricter safeguards for minors and provide clear disclaimers about AI interactions.

Key Takeaways

Personal data has long been weaponized within predictive systems, and these risks will only escalate as AI technology advances. Both foreign and domestic actors have demonstrated a willingness to engage in data harvesting and social manipulation, with authoritarian regimes particularly incentivized to exploit these tools in the absence of democratic safeguards. In 2025, AI-driven social engineering—both covert and overt—will further entrench the post-fact landscape.

AI is also set to revolutionize the sex industry, as online creators increasingly integrate AI digital twins into their income strategies. The rise of AI brothels signals a new frontier in sexual exploitation, raising ethical concerns. In the U.S., pornography laws requiring age verification have fractured the market, forcing major platforms like Pornhub and Brazzers to withdraw from certain states. This income disruption has pushed many performers toward AI-driven revenue streams.

With AI regulation largely absent in the U.S., emerging court cases may shape future policies. In 2025, governments and lawmakers will begin reckoning with their role in AI governance, striving to balance consumer protection with technological innovation.

The Quiet Arms Race

In the summer of 2017, China released a document outlining its ambition to develop the next generation of artificial intelligence. Eight years later, those ambitions are coming to fruition.

The first weeks of 2025 have seen China’s AI efforts dominate global headlines, from the 12-hour TikTok ban to the market-disrupting release of Deepseek-v3, its meteoric rise to the top of the Apple charts, and the ensuing fallout over allegations that it was trained using OpenAI’s proprietary data.

These high-profile events are merely the surface of a much deeper and more consequential reality: a silent but intense arms race between the West and China to define the technological landscape of the modern era.

Rebounding from the Chip Ban

In October 2022, the U.S. Commerce Department imposed restrictions on exporting advanced microchips with military and AI applications, aiming to curb China’s technological advancements. At the time, China was already several years into its stated plan to dominate the AI industry.

The effectiveness of this move is now under scrutiny. Breakthroughs like Deepseek-v3 and Tencent’s Hunyuan-Large have prompted industry leaders, including former Google CEO Eric Schmidt, to express shock at China’s progress.

“This is shocking to me,” Schmidt remarked. “I thought the restrictions we placed on chips would keep them back.”

Stockpiling and smuggling may explain why the chip ban has failed to stall China’s AI development. However, the broader implication is more significant: U.S. policymakers appear reactive and disorganized, while China’s tech sector advances with methodical precision.

The TikTok Factor

A key directive from China’s 2017 New Generation Artificial Intelligence Development Plan emphasizes AI’s role in understanding and shaping public cognition. It aims to enable authorities to “grasp group cognition and psychological changes in a timely manner; [...] take the initiative in decision-making and reactions; [...] and significantly enhance social governance.”

Just one year before this plan was unveiled, ByteDance launched TikTok, a social media juggernaut that by 2022 had been downloaded 3 billion times and, by 2024, boasted 170 million U.S. users spending an average of 51 minutes per day on the app.

TikTok’s core strength lies in its algorithm, which delivers a precisely tailored content stream to each user. A 2021 New York Times investigation into an internal ByteDance document, “TikTok Algo 101,” revealed how the platform optimizes engagement.

The document describes TikTok’s ultimate goal as maximizing user retention and time spent on the app. It provides an equation for how videos are scored, factoring in user interactions such as likes, comments, and playtime:

Plike X Vlike + Pcomment X Vcomment + Eplaytime X Vplaytime + Pplay X Vplay
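To make the formula concrete, here is a minimal Python sketch of how such a weighted score could be computed and used to rank videos. It is an illustration only, not TikTok's implementation. The reading of each term is an assumption: P as a predicted probability, E as an expected value (here, seconds of playtime), and V as the value weight assigned to each signal. The class names, function names, and numbers are all hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Predictions:
    """Hypothetical per-video predictions for one user (meanings assumed)."""
    p_like: float      # assumed: predicted probability of a like
    p_comment: float   # assumed: predicted probability of a comment
    e_playtime: float  # assumed: expected playtime, in seconds
    p_play: float      # assumed: predicted probability the video is played


@dataclass
class Weights:
    """Hypothetical value weights; the real values are not public."""
    v_like: float
    v_comment: float
    v_playtime: float
    v_play: float


def score(pred: Predictions, w: Weights) -> float:
    """Sum each prediction multiplied by its weight, term by term as in the formula."""
    return (
        pred.p_like * w.v_like
        + pred.p_comment * w.v_comment
        + pred.e_playtime * w.v_playtime
        + pred.p_play * w.v_play
    )


def rank(candidates: Dict[str, Predictions], w: Weights) -> List[str]:
    """Return video ids ordered from highest to lowest score."""
    return sorted(candidates, key=lambda vid: score(candidates[vid], w), reverse=True)


# Made-up numbers, chosen only to show the mechanics.
weights = Weights(v_like=2.0, v_comment=4.0, v_playtime=0.5, v_play=1.0)
candidates = {
    "a": Predictions(p_like=0.5, p_comment=0.25, e_playtime=10.0, p_play=1.0),
    "b": Predictions(p_like=0.25, p_comment=0.0, e_playtime=20.0, p_play=1.0),
}
ranking = rank(candidates, weights)
```

With these made-up weights, video "b" outranks video "a" even though it is less likely to be liked or commented on, because its longer expected playtime carries more total weight. That is one way a score of this shape can favor time spent over explicit approval.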

In essence, the app creates a mathematical response to human boredom, ensuring users remain engaged. While this design is effective for entertainment, it aligns seamlessly with the goals outlined in China’s AI development strategy—leveraging cognitive insights to reinforce social stability.

Another Failed Ban

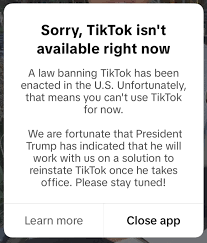

On January 19, 2025, TikTok preemptively shut down ahead of a scheduled U.S. ban. However, just 12 hours later, as former President Donald Trump prepared for his inauguration, the app was abruptly reinstated. Both the removal and the reinstatement were punctuated by notifications sent to all users, which praised the incoming President for the ongoing availability of the app.

Despite being removed from major U.S. app stores, TikTok remains widely available, with pre-installed phones fetching nearly $1 million on eBay. The continued ability to use the app hinges on ByteDance either selling or partnering with a U.S. company to maintain domestic operations. “Every rich person has called me,” Trump quipped, referencing the flood of interest in acquiring the platform.

Among the most intriguing potential buyers is Perplexity AI, a company specializing in generative AI. The prospect of merging TikTok’s behavioral analytics with a GPT-powered AI model carries profound implications, potentially reshaping the internet into an even more personalized, yet unregulated, information ecosystem.

Deepseek Upends the AI Market

Amid the TikTok drama, Chinese AI firm Deepseek AI launched Deepseek-v3, an ultra-efficient large language model (LLM) trained using a fraction of the computing power required by its U.S. competitors.

The release sent shockwaves through both the AI industry and financial markets. While OpenAI accused Deepseek of illegally distilling its training data, legal scholars quickly raised another red flag: privacy concerns.

One legal analysis summarizes DeepSeek’s privacy policy this way:

“DeepSeek’s privacy policy gives DeepSeek broad rights to exploit user data collected through prompts or user devices, including by monitoring interactions, analyzing usage patterns, and training its technology. All personal data is stored on servers in China.”

These terms effectively render the model unsuitable for industrial use. No major corporation would entrust its proprietary data to a service with such open-ended data-sharing policies. Even individual consumers have begun to reconsider their use of Deepseek, though the app remains atop the App Store rankings.

Despite the privacy controversy, Deepseek’s efficiency raises another crucial issue: the role of microchip scarcity in shaping AI innovation.

Arvind Ravikumar, co-director of the Energy Emissions Modeling and Data Lab at the University of Texas, explains:

“There was not a need to think about efficiency; you could just add more chips. What this means is that there are significant unrealized efficiency gains in AI computing that could reduce energy consumption.”

If access to hardware defined the first front of the AI arms race, energy access will define the next.

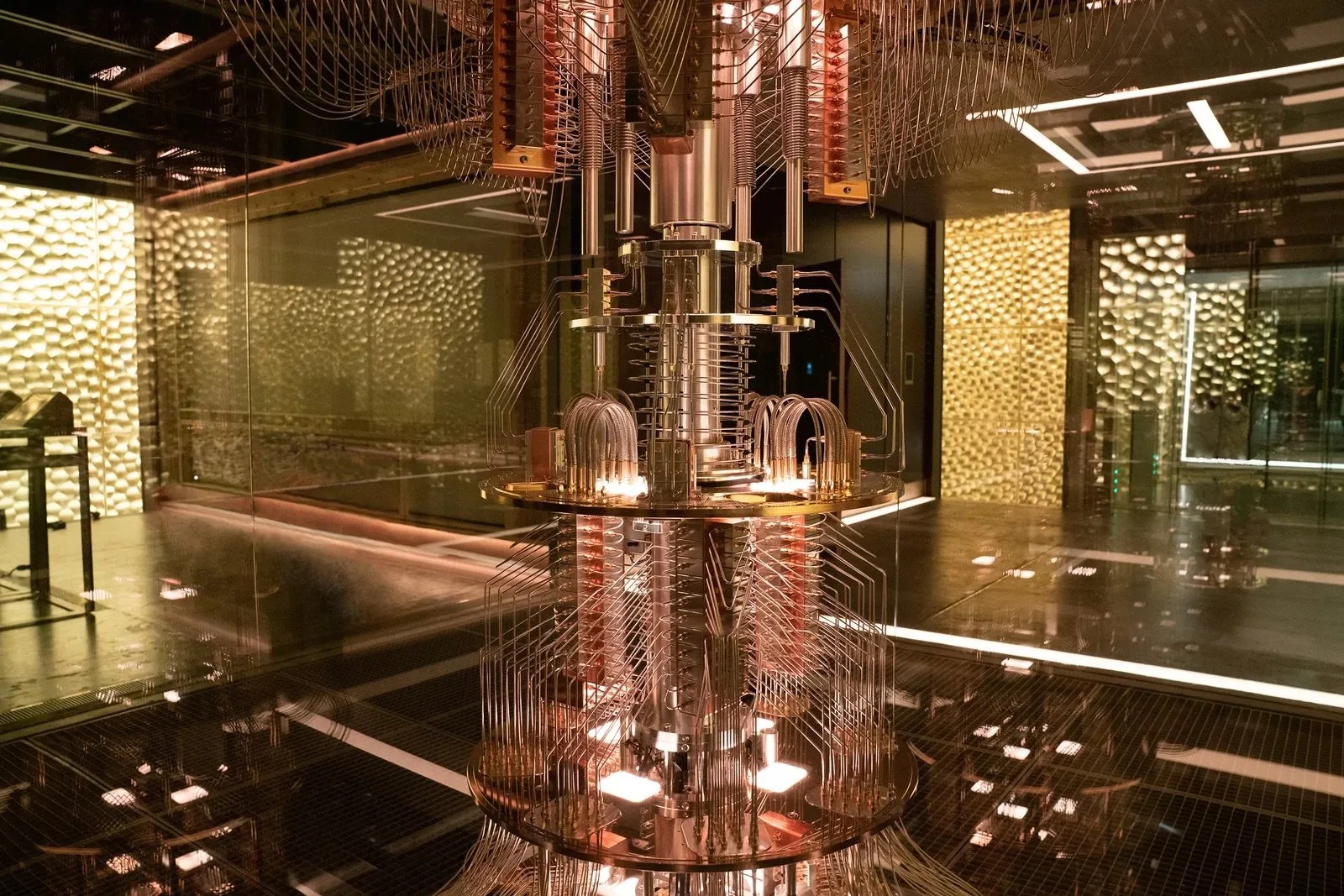

Microsoft Goes Nuclear

In 2028, the Three Mile Island nuclear site, home to the worst nuclear disaster in U.S. history, is slated to reopen to power Microsoft’s AI data centers. The project is expected to create thousands of jobs and inject billions into the economy of Pennsylvania, a key swing state.

Microsoft is not alone. In late 2024, Amazon and Google secured similar deals, laying the groundwork for Project Stargate, a sweeping initiative to develop nuclear-powered AI infrastructure.

Mere days into his presidency, Trump announced a $500 billion private-sector investment in AI infrastructure. Flanked by tech titans, including SoftBank’s Masayoshi Son, OpenAI’s Sam Altman, and Oracle’s Larry Ellison, he framed the initiative as a historic leap forward.

“This will be the most important project of this era,” declared Altman.

Ellison added that Oracle had already begun construction on ten hyperscale data centers, which could transform digital healthcare by enabling rapid disease treatment and vaccine development.

In tandem, Trump rescinded Biden-era AI regulations, paving the way for an industry-led approach. Project Stargate envisions a vast, interconnected network of nuclear-powered AI data centers—an unprecedented technological shift, unrestrained by regulation.

This investment comes nearly a decade after China’s own AI development strategy was set in motion.

Key Takeaways

2025 is the year the quiet AI arms race will go public. The contrast is stark: U.S. policymakers appear fragmented and reactionary, while China advances with centralized efficiency.

China’s head start in AI investment—combined with its ability to bypass ineffective U.S. restrictions—has given it a competitive edge. Meanwhile, the lack of AI regulation in the U.S. raises critical questions, particularly as nuclear power plants are handed over to private tech companies.

Will the growing dominance of Chinese AI products force the U.S. to impose stricter domestic and international regulations around the use of AI technology? TikTok has already dodged one ban despite concerns over its societal impact. Deepseek’s privacy issues have hurt consumer trust, but will that scrutiny extend beyond individual users and spark broader legislative action? Watch this space.

As the world watches this unfolding battle for AI supremacy, one thing is clear: the stakes have never been higher.
